In a world of digital photography and social media, we often want to look amazing, and modern computer vision techniques allow us to do just that, even to the point of changing our facial features. Have you ever wondered about the technical side of things? How exactly do algorithms driven by artificial intelligence work their magic to make faces more attractive?

The set of tools for improving facial aesthetics in photos is very broad, ranging from basic color adjustments to more complex facial adjustments. To make a face in a photo more attractive, one can:

• Perform general color correction: adjust brightness, saturation, hues, and lighting.

• Retouch the skin: even out skin tone, remove blemishes, and smooth out wrinkles.

• Modify facial features: for example, enlarge the eyes, make the nose smaller, make the lips smaller but fuller, or make the face more symmetrical.

• Add decorative elements: makeup, rhinestones, tattoos, costume jewelry.

In this article, we will look at algorithms for automatic skin retouching: how to add such functionality to your application and what capabilities modern commercial solutions offer.

Curious about other AI algorithms in your smartphone, like computer vision face recognition? Dive into our detailed exploration in "Computer Vision Applications in your smartphone" article.

Imagine you have a photo and you want to make it look nicer — like smoothing out wrinkles or making the skin tone even. There are special algorithms that can do this for you. Usually, such algorithms answer two main questions:

1. Where to make changes in the photo? We need to know which parts of the picture are skin that might need smoothing or other adjustments.

2. How to make those changes? We need to figure out what exactly to do to make the skin look better.

With the rise of more complex deep learning (DL) models, people try to combine multiple steps into a single model, which results in:

3. End-to-end solution. We train a neural network to handle both tasks simultaneously: you input a photo, and it outputs that photo with improved skin appearance. The primary benefit of this method is its simplicity; there's no need to switch between different algorithms for identifying which areas to enhance and then applying those enhancements. Instead, you have a single model that manages the entire process. We will explore such combined solutions in detail.

Now let's take a look at different approaches to solving these tasks.

Note: In the following review, a reference to the algorithm's implementation is provided in square brackets if available.

Before we can enhance a photo, we need to know where to focus our efforts. This section looks at how to identify the parts of a photo that need retouching. We'll also mention some key studies and methods that have shaped the way we approach this task.

Identifying the facial region, or 'face bounding box', is a straightforward and effective first step. This technique locates the face within the photo, creating a 'box' around it to focus our retouching efforts. This region of interest (RoI) can be used directly, as in Facial skin beautification via sparse representation over learned layer dictionary, or further refined for specific enhancements.

Modern computer vision face detection leverages deep neural networks for accuracy. Techniques like training an object detector such as CenterNet [Python] or YOLOX [Python] on face datasets (e.g., WIDER Face), or employing pre-trained models are common practices. The key is choosing a model that ensures the entire face is within the bounding box for optimal results.

For instance, the face detector from the InsightFace project [PyTorch], best known for the ArcFace face recognition model, is effective for this purpose, as its boxes cover the full face. In contrast, the MediaPipe face detector may not meet this criterion for certain tasks, because its boxes do not always cover the entire face.
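Whatever detector is used, the requirement that the whole face fall inside the box can also be enforced after the fact by padding the detection. The sketch below is a minimal illustration, assuming the detector returns boxes as `(x0, y0, x1, y1)` pixel coordinates; `expand_box` is a hypothetical helper, and the default scale of 1.3 is an arbitrary choice, not a value taken from any of the works above.

```python
def expand_box(box, img_w, img_h, scale=1.3):
    """Grow a face box (x0, y0, x1, y1) around its center and clip it to the image.

    Tight detector boxes may cut off the forehead or chin; padding the box
    is a cheap way to keep the whole face inside the region of interest.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    half_w = (x1 - x0) * scale / 2
    half_h = (y1 - y0) * scale / 2
    # Clip to the image so the box can be used directly for slicing.
    nx0 = max(0, int(round(cx - half_w)))
    ny0 = max(0, int(round(cy - half_h)))
    nx1 = min(img_w, int(round(cx + half_w)))
    ny1 = min(img_h, int(round(cy + half_h)))
    return nx0, ny0, nx1, ny1
```

The padded box can then be used to crop the RoI with NumPy slicing, e.g. `roi = image[y0:y1, x0:x1]`.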

Sometimes it may be beneficial to know the borders of the face area with pixel precision, for example when the face is occluded by sunglasses or hair. We also need to know the precise locations of facial features in order to apply different beautification techniques to the skin and to, for example, the eyes. This process, known as segmentation, generates a 'skin mask' that marks the exact regions of the face to be retouched.

Below are methodologies for creating a skin mask:

• Facial Key Points Detection: Techniques such as the Ensemble of Regression Trees (FabSoften) and Active Shape Models (Facial Skin Beautification Using Region-Aware Mask), alongside neural networks, can identify facial landmarks. These landmarks guide the creation of contours and areas for retouching.

• Segmentation Techniques: Methods such as the Gaussian Mixture Model and neural network-based segmentation accurately isolate the skin regions from the rest of the image. These approaches are detailed in works like An algorithm for automatic skin smoothing in digital portraits (FabSoften).

• Edge-Aware Smoothing Filters: A rough mask built from facial key points can be refined with a global edge-aware filter, which minimizes gradients everywhere except at high-contrast edges, so that the mask boundary follows the actual facial contours. This method is demonstrated in Facial Skin Beautification Using Region-Aware Mask.

• Guided Feathering: This technique [Matlab, OpenCV] refines the skin mask, particularly around challenging areas like hair. Guided Feathering is used for mask refinement in the FabSoften study, showcasing its effectiveness in achieving smoother transitions.
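To make the mask-building step concrete, here is a minimal NumPy sketch. It swaps in a simpler technique than those listed: a fixed Cr/Cb range in the YCrCb color space (a classic rule-of-thumb skin detector, not a GMM) produces a rough binary mask, and a guided filter (He et al.) then feathers it so that its edges follow the image structure, in the spirit of guided feathering. The thresholds, `r`, and `eps` are illustrative assumptions; a production pipeline would more likely rely on landmarks or a segmentation network, as described above.

```python
import numpy as np


def rgb_to_ycrcb(img):
    """Split a float RGB image (values in [0, 255]) into Y, Cr, Cb planes (BT.601 weights)."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128.0
    cb = (b - y) * 0.564 + 128.0
    return y, cr, cb


def rough_skin_mask(img):
    """Binary (0/1) skin mask from fixed Cr/Cb ranges: a rule-of-thumb detector, not a GMM."""
    _, cr, cb = rgb_to_ycrcb(img.astype(np.float64))
    skin = (cr >= 133) & (cr <= 173) & (cb >= 77) & (cb <= 127)
    return skin.astype(np.float64)


def box_filter(x, r):
    """Mean over a (2r+1) x (2r+1) window, edge-padded, computed with a summed-area table."""
    k = 2 * r + 1
    h, w = x.shape
    p = np.pad(x, r, mode="edge")
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def guided_filter(guide, src, r, eps):
    """Guided filter (He et al.): edge-aware smoothing of `src` steered by `guide`."""
    mean_i = box_filter(guide, r)
    mean_p = box_filter(src, r)
    cov_ip = box_filter(guide * src, r) - mean_i * mean_p
    var_i = box_filter(guide * guide, r) - mean_i * mean_i
    a = cov_ip / (var_i + eps)  # local linear model: src ~ a * guide + b
    b = mean_p - a * mean_i
    return box_filter(a, r) * guide + box_filter(b, r)


def soft_skin_mask(img, r=8, eps=1e-3):
    """Rough color-based mask, feathered with the luma channel as guide; floats in [0, 1]."""
    rough = rough_skin_mask(img)
    luma = rgb_to_ycrcb(img.astype(np.float64))[0] / 255.0
    return np.clip(guided_filter(luma, rough, r, eps), 0.0, 1.0)
```

The returned float mask is close to 1 on skin and 0 elsewhere, with soft transitions along the boundary, so it can serve directly as per-pixel blending weights for the retouching step.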

The study Band-Sifting Decomposition for Image Based Material Editing [R] operates under the assumption that a face skin mask is provided along with the photo, highlighting the importance of this component in the retouching workflow.

Creating an accurate face skin mask is foundational to achieving nuanced and natural-looking photo retouching. By employing these advanced techniques, we can precisely target and enhance specific facial features, elevating the overall aesthetic of digital portraits.

Sometimes photos have pronounced imperfections that require targeted attention. To address these effectively, it's crucial to detect and localize them for more intensive treatment. This step focuses on detecting individual flaws such as:

• Spots: FabSoften localizes spots with the Canny edge detector so that they can be corrected precisely.

• Wrinkles: Addressed through specialized neural networks, with methods outlined in Photorealistic Facial Wrinkles Removal, focusing on the delicate removal of wrinkles for a smoother appearance.

Such detailed processing is necessary when general skin retouching algorithms fall short in handling various types of imperfections effectively.

Note: The masks created for these imperfections can be either boolean (indicating presence or absence) or float (providing a degree of intensity).
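As an illustration of boolean versus float imperfection masks, the sketch below flags small dark blemishes by comparing each pixel with the mean of its neighborhood, restricted to the skin mask. This local-contrast threshold is an assumed stand-in, not FabSoften's Canny-based method, and `spot_masks`, `r`, and `thresh` are hypothetical names and values chosen for the example.

```python
import numpy as np


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, edge-padded, via a summed-area table."""
    k = 2 * r + 1
    h, w = x.shape
    s = np.pad(np.pad(x, r, mode="edge").cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return (s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]) / (k * k)


def spot_masks(gray, skin_mask, r=7, thresh=12.0):
    """Flag small dark blemishes inside the skin mask.

    Returns a boolean mask (spot / no spot) and a float mask in [0, 1]
    that grows with how much darker a pixel is than its neighborhood.
    """
    gray = gray.astype(np.float64)
    darkness = box_mean(gray, r) - gray                 # > 0 where a pixel is darker than its surroundings
    darkness = np.where(skin_mask > 0, darkness, 0.0)   # ignore everything outside the skin
    hard = darkness > thresh
    soft = np.clip(darkness / (2.0 * thresh), 0.0, 1.0)
    return hard, soft
```

The boolean mask suits hard decisions such as "inpaint this pixel", while the float mask can scale the strength of a correction.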

The strategies mentioned can be employed in combination for a comprehensive approach, as seen in FabSoften, or used individually to target specific issues.

Once the face has been detected and the areas that need improvement have been identified, the next question is how to improve them. This section covers the image processing techniques used to enhance skin texture in the areas we've localized. We'll explore practical methods for refining skin, aiming for a natural and polished finish.

To enhance skin texture, a clear strategy is to use computer vision methods that smooth out low-gradient areas while keeping the edges sharp. These algorithms fall into two categories: local and global. Local filters include:

• The Bilateral Filter [OpenCV] smooths the image while preserving edges by weighting neighboring pixels by both their spatial distance and their intensity difference.

• The Weighted Median Filter [OpenCV] is another tool for noise reduction without blurring edges.

• The Guided Filter [Matlab, OpenCV] is effective for edge-preserving smoothing.

• The Domain Transform filter [OpenCV] quickly reduces noise while preserving important details like edges.

Local filters calculate a new pixel value as a weighted sum of nearby pixel values, with weights depending on either the pixel values themselves or additional guidance. For example, a Bilateral Filter uses the intensity differences between neighboring pixels to maintain edges during smoothing.

Parameters such as window size and blurring strength are hyperparameters; they can be either fixed or adaptive, as seen in the Attribute-aware Dynamic Guided Filter from FabSoften, which adjusts parameters based on detected facial imperfections and the skin mask.
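The local-filter idea can be shown with a brute-force bilateral filter written directly in NumPy. Each output pixel is a weighted average of its `(2r+1) x (2r+1)` neighborhood, where the weight is the product of a spatial Gaussian (`sigma_s`) and a range Gaussian on the intensity difference (`sigma_r`), so pixels across a strong edge contribute almost nothing. This is a readable sketch with illustrative default parameters; in practice OpenCV's `cv2.bilateralFilter` is much faster. The `blend` helper, also an assumption of this example, restricts the effect to a skin mask.

```python
import numpy as np


def bilateral_filter(gray, radius=3, sigma_s=2.0, sigma_r=20.0):
    """Brute-force bilateral filter for a 2-D grayscale image; returns floats."""
    img = gray.astype(np.float64)
    height, width = img.shape
    padded = np.pad(img, radius, mode="edge")
    num = np.zeros_like(img)
    den = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbor at offset (dy, dx), aligned with the center pixel.
            nb = padded[radius + dy:radius + dy + height, radius + dx:radius + dx + width]
            w_spatial = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))
            w_range = np.exp(-((nb - img) ** 2) / (2.0 * sigma_r ** 2))  # small across edges
            weight = w_spatial * w_range
            num += weight * nb
            den += weight
    return num / den


def blend(original, processed, mask):
    """Processed value where mask is 1, original where it is 0, a mix in between."""
    return mask * processed + (1.0 - mask) * original
```

Fixed `sigma_s` and `sigma_r` correspond to the non-adaptive case; an adaptive variant would vary them per pixel, for example according to the imperfection mask.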

Global filters include:

• L0 smoothing [Matlab], which simplifies an image by selectively smoothing areas while preserving significant edges and features.

• Fast Global Smoothing [C++, OpenCV], an efficient approach to reducing noise and minor details across the entire image.

• Mutually Guided Filter [Matlab], which filters a target image and a reference image jointly, preserving the structures they share.

These remove minor details while preserving dominant structures by optimizing a global function. This function is formulated so that its minimum is an image that is as close as possible to the original and has minimal gradients at every pixel, except where the original image has a strong gradient. Unlike with local methods, the value of every pixel of the new image depends on all pixels of the original.
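A global filter can be sketched as a weighted least squares (WLS) problem. For a 1-D signal `f`, the example minimizes `sum_i (u_i - f_i)^2 + lam * sum_i w_i * (u_{i+1} - u_i)^2` with `w_i = exp(-|f_{i+1} - f_i| / sigma)`, so the smoothness penalty is relaxed where the input has a strong edge. Setting the gradient to zero gives the linear system `(I + lam * D^T W D) u = f`, where `D` is the forward-difference matrix and `W` the diagonal matrix of weights. Applying such a 1-D solver along rows and then columns is roughly the idea behind Fast Global Smoothing; the dense `np.linalg.solve`, the weights recomputed from the intermediate result in the column pass, and the `lam` and `sigma` values are simplifications chosen for readability, not the published algorithm.

```python
import numpy as np


def wls_1d(f, lam=50.0, sigma=10.0):
    """Edge-aware 1-D smoothing by weighted least squares.

    Minimizes sum_i (u_i - f_i)^2 + lam * sum_i w_i * (u_{i+1} - u_i)^2,
    with w_i = exp(-|f_{i+1} - f_i| / sigma), by solving (I + lam * D^T W D) u = f.
    """
    f = np.asarray(f, dtype=np.float64)
    n = f.size
    d = np.diff(np.eye(n), axis=0)            # (n-1) x n: (D u)_i = u_{i+1} - u_i
    w = np.exp(-np.abs(np.diff(f)) / sigma)   # near 0 across strong edges, near 1 in flat areas
    a = np.eye(n) + lam * d.T @ (w[:, None] * d)
    return np.linalg.solve(a, f)


def global_smooth(img, lam=50.0, sigma=10.0):
    """Apply the 1-D solver along every row, then along every column."""
    rows = np.apply_along_axis(wls_1d, 1, np.asarray(img, dtype=np.float64), lam, sigma)
    return np.apply_along_axis(wls_1d, 0, rows, lam, sigma)
```

Because each 1-D system couples every pixel of a row, and the column pass then couples the rows, every output pixel depends on every input pixel, which is exactly the property that distinguishes global methods from local ones.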

While these algorithms excel at smoothing, they can sometimes overly simplify the skin's natural texture, leading to a less realistic appearance. The techniques discussed in the following section can help preserve texture, ensuring a more natural result.

Layer decomposition is a technique where an image is divided into several layers, such as detail, lighting, and color. These layers can be transformed by either learnable (AI-driven) or non-learnable methods before being recombined to form the final image. This process allows for targeted enhancements and modifications.

• Facial skin beautification via sparse representation over learned layer dictionary: This method breaks down the image into detail, light, and color layers. The detail layer is then swapped with one from a pre-built dictionary containing an array of faces, enabling precise skin enhancements.

• Band-Sifting Decomposition for Image-Based Material Editing [R]: Here, an image undergoes sequential decomposition into various layers based on frequency and amplitude, facilitating edits that affect the skin's appearance, such as oiliness and wrinkles.

• High Pass Skin Smoothing [CoreImage]: By separating high and low frequencies, this approach allows for specific frequency adjustments, smoothing the skin while preserving essential textures.

• Facial Skin Beautification Using Region-Aware Mask: This computer vision technique employs the CIELAB color space and edge-aware smoothing to decompose an image, allowing for detailed adjustments to the skin's detail, lighting, and color.

• Skin Texture Restoration (from FabSoften): Utilizing wavelet domain decomposition, this method separates the image into low and high-frequency components, followed by recombination that restores texture while maintaining overall smoothness.
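The common core of these methods (split the image into a low-frequency base and a high-frequency detail layer, edit the layers separately, recombine them) can be sketched in a few lines of NumPy. The example below is a generic illustration, not a reimplementation of any method listed: a box blur gives the base layer, and the detail layer is clipped so that small-amplitude texture such as pores survives while large deviations such as dark spots are attenuated. `layer_smooth`, `radius`, and `texture_limit` are hypothetical names and values.

```python
import numpy as np


def box_blur(x, r):
    """Mean over a (2r+1) x (2r+1) window, edge-padded, via a summed-area table."""
    k = 2 * r + 1
    h, w = x.shape
    s = np.pad(np.pad(x, r, mode="edge").cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return (s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]) / (k * k)


def layer_smooth(gray, radius=4, texture_limit=6.0):
    """Two-layer edit: keep the low-frequency base, clip the high-frequency detail."""
    img = gray.astype(np.float64)
    base = box_blur(img, radius)   # low frequencies: tone and lighting
    detail = img - base            # high frequencies: pores, fine lines, blemishes
    # Small-amplitude detail (texture) passes through; large deviations (spots) are capped.
    detail_edited = np.clip(detail, -texture_limit, texture_limit)
    return base + detail_edited
```

Because only the detail layer is edited, regions whose local variation stays within `texture_limit` come out unchanged, which is how layer-based methods avoid the over-smoothed look of plain blurring.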

Layer decomposition offers an essential advantage in skin beautification: it enables detailed control over the enhancement process. By adjusting specific layers, editors can target imperfections, adjust lighting, and modify color without compromising the natural texture and quality of the skin. This approach ensures that enhancements remain realistic, preserving the subject's natural appearance while achieving a polished, professional result. It strikes a balance between correction and preservation, essential for high-quality, lifelike photo retouching.

Generative models represent a powerful class of algorithms in image processing, particularly useful for enhancing images by removing imperfections.

Inpainting is a prime example of how generative models facilitate precise changes in images. When it comes to removing pre-localized imperfections — like wrinkles, spots, or unwanted objects — the model first identifies these areas. It then predicts what the underlying, imperfection-free area should look like based on its training. This process involves generating pixels that seamlessly blend with the surrounding image areas, effectively "filling in" the gaps with content that appears natural and coherent with the entire image.

Techniques like these are showcased in works such as Photorealistic Facial Wrinkles Removal, where generative models are trained to recognize and reconstruct facial regions without wrinkles, achieving results that retain the subject's likeness while enhancing their appearance.
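For contrast with learned inpainting, here is the simplest non-generative way to fill a masked region: repeatedly replace each hole pixel with the average of its four neighbors until the values settle (diffusion, or harmonic, inpainting). It is an illustrative baseline only, not the method of Photorealistic Facial Wrinkles Removal; a generative model replaces this averaging with a learned prediction, which is what lets it synthesize plausible skin texture instead of a smooth fill. OpenCV's `cv2.inpaint` (with `cv2.INPAINT_TELEA` or `cv2.INPAINT_NS`) offers faster classical alternatives. `diffusion_inpaint` and `iters` are hypothetical names.

```python
import numpy as np


def diffusion_inpaint(img, mask, iters=500):
    """Fill pixels where mask is True by repeatedly averaging their 4 neighbors.

    Known pixels never change, so values diffuse inward from the hole border.
    """
    out = img.astype(np.float64).copy()
    hole = mask.astype(bool)
    out[hole] = out[~hole].mean()  # neutral initial guess for the hole
    for _ in range(iters):
        p = np.pad(out, 1, mode="edge")
        # Average of the up, down, left, and right neighbors of every pixel.
        avg = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0
        out[hole] = avg[hole]
    return out
```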

Modern computer vision techniques, especially DL models (e.g., generative ones), make it possible to combine multiple tasks into a single end-to-end (e2e) solution.

These models streamline the photo retouching workflow by automating both the identification of areas needing modification and the application of those modifications. This approach is highly efficient, as it eliminates the need to manually combine various algorithms for different tasks.

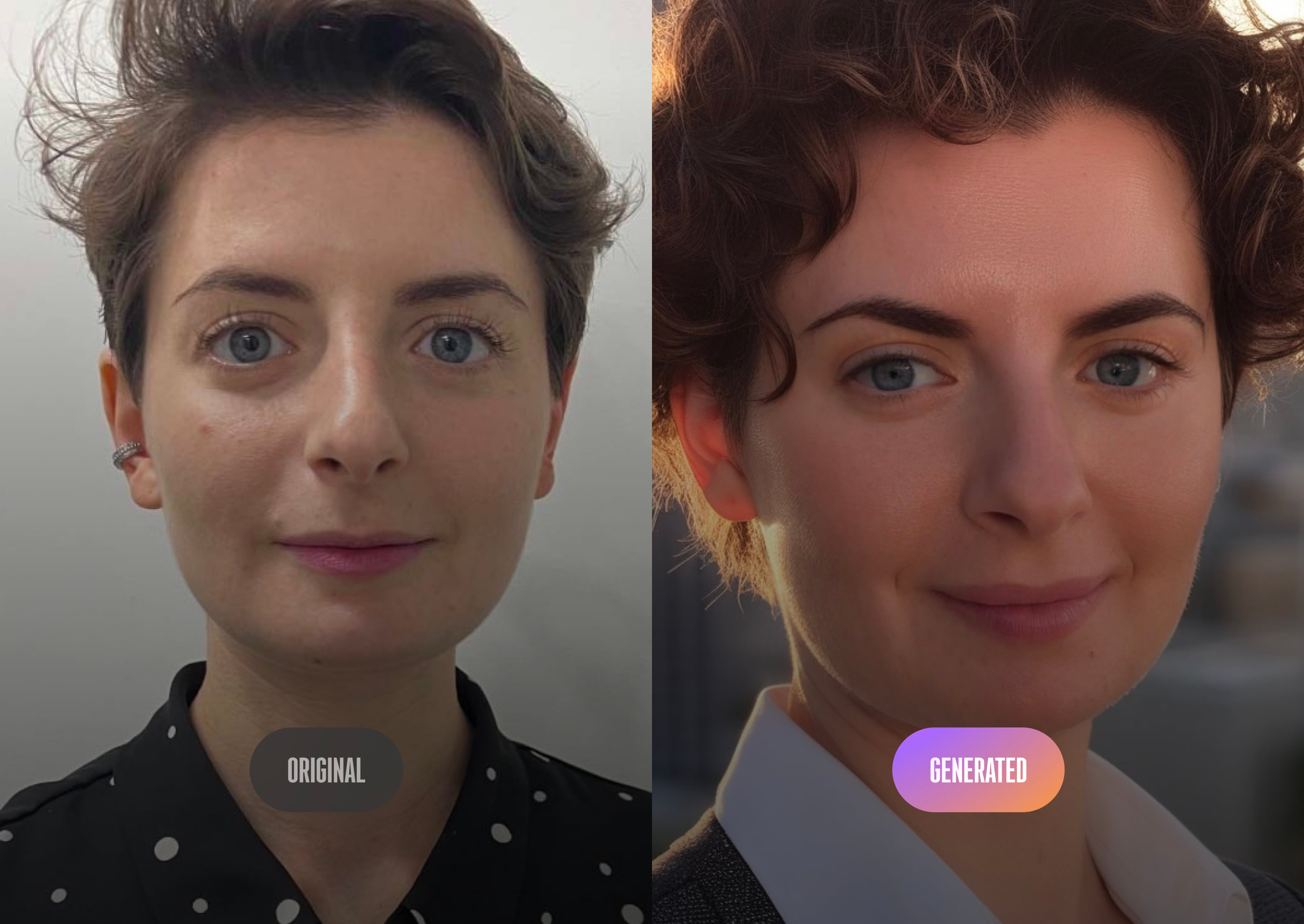

End-to-end generative models are trained on comprehensive datasets that include examples of both unedited and professionally retouched images. By analyzing these pairs, the models learn not just what features need to be enhanced but also how to perform these enhancements to meet professional standards. An example of this method is AutoRetouch, which demonstrates the capability to transform images holistically, improving aspects like skin texture, lighting, and color balance across the entire image.

Generative models are designed to remove skin imperfections while keeping the skin's natural texture, aiming for results that look real and not overly edited.

However, end-to-end models like Stable Diffusion can sometimes alter faces too much, making people look different from their actual appearance. This is a key risk with these models: they process everything at once, so if they make a mistake in one area, it affects the whole image. Unlike using separate steps for each task, where you can check and fix issues in specific areas like wrinkle detection, end-to-end models don't allow for easy adjustments to individual parts of the process.

After exploring various methods for skin enhancement, let's now see which of them are openly available for improving our photos.

Despite the high-quality skin retouching demonstrated by FabSoften and AutoRetouch, their source code is not openly available, so we were able to test only a limited number of approaches.

Here are the results from a few open-source repositories we found:

• Face-Smoothing: Detection and Beautification, focusing on facial feature enhancement.

• Bilateral Filter (OpenCV), which applies edge-preserving smoothing.

• An unofficial implementation of FabSoften for dynamic skin smoothing.

• Google_camp (Human beautifying and Image matting), which offers tools for both beautification and advanced image processing tasks.

We've examined skin retouching methods detailed in academic research, but could there be more sophisticated techniques? How do commercial products compare in visual quality? In the following section, we'll evaluate several leading commercial solutions to see how they perform.

Exploring the capabilities of algorithms in commercial applications reveals some impressive results. Here's a look at some notable platforms for making faces more attractive with AI (💰 indicates services that require a paid subscription):

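1. Visage Lab™ offers a retouch feature that's accessible through the mobile app (VisageLab Mobile) and the web version (VisageLab Web).

2. Facetune™ provides an enhance feature to fine-tune your photos 💰

3. BeautyPlus™ includes multiple retouching options: Auto, HD, Concealer, and Flatten.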

Facetune and several BeautyPlus features allow users to control the intensity of adjustments, offering personalized results. In contrast, some options deliver fixed enhancements.

These apps showcase remarkable advancements in photo retouching technology, and we extend our gratitude to the developers for their innovation and dedication. Please note that this list is not exhaustive, but it highlights some of the powerful tools available for enhancing digital images.

Let's evaluate the capabilities of these commercial apps on test examples.

• Changed the color palette of the entire image: VisageLab Mobile (added a purple hue), BeautyPlus Auto (lightened), BeautyPlus HD (increased color saturation).

• Changed only the face color: VisageLab Web, Facetune, BeautyPlus Concealer (made the skin tone warmer, with a blush effect).

• All algorithms, except for BeautyPlus Flatten, evened out skin tone and covered some red spots. BeautyPlus Concealer did this the best.

• All algorithms smoothed out wrinkles.

• Changed facial features to align with "beauty standards": BeautyPlus Auto (enlarged eyes, reduced nose and jaw), Facetune (brightened eyes, more expressive eyelashes, lifted the tips of the lips). The following videos demonstrate the algorithms' work more clearly.

The results of VisageLab Mobile and VisageLab Web worsened, with VisageLab Mobile producing artifacts such as brightened spots on the forehead. The other algorithms' results did not change significantly.

a. The lower half of the face is covered by a hand.

All computer vision methods except VisageLab Mobile and VisageLab Web blurred the hand.

b. The left half of the face is covered by a hand.

VisageLab Mobile and VisageLab Web could not detect the face and therefore could not process it.

BeautyPlus Auto, BeautyPlus HD, BeautyPlus Concealer failed to remove the red spot on the forehead next to the hand, although they successfully did so in the previous photo without the hand. The hand looks less blurred than before.

c. Face obstructed diagonally by an object.

As before, VisageLab Mobile and VisageLab Web could not detect the face in the photo.

Facetune blurred the text on the cookie package.

BeautyPlus Concealer did not perform retouching on the part of the face above the package.

4. Photo of a face in poor lighting.

All the computer-vision-based techniques managed to retouch the skin, and BeautyPlus Auto and BeautyPlus HD also improved the lighting.

This case study is interesting because it requires more accurate operation of the face detection algorithm. As a result, the skin was retouched, but less effectively than in previous examples.

6. Photo with two faces.

All algorithms retouched both faces in the photo.

a. Porcelain mask covering the entire face.

VisageLab Mobile and VisageLab Web smoothed elements of the mask's ornament, with VisageLab Mobile creating brightened spots in the nasolabial area.

b. Mask around the eyes.

VisageLab Mobile and VisageLab Web could not detect the face in the photo.

BeautyPlus Flatten blurred the mask under the right eye.

VisageLab Mobile and VisageLab Web failed to detect the face in the photo.

None of the algorithms removed the scar from the face.

After testing a variety of commercial applications across different scenarios, it's clear that each has its strengths and areas for improvement. From enhancing underwhelming skin conditions to addressing challenging obstructions and lighting, these tools offer a range of solutions for photo retouching. However, limitations become apparent with more complex cases, such as obscured faces or unique facial features, highlighting the importance of ongoing development in this field.

We extend our thanks to the creators of these applications for pushing the boundaries of what's possible in digital beautification. Please note that this review, while comprehensive, does not cover the entirety of available tools and techniques in the fast-paced domain of image enhancement. It highlights the importance of choice in selecting the right tool for specific needs and encourages continued exploration and innovation in the field.

Automatic computer vision techniques for skin retouching have achieved remarkable quality, but there is still room for improvement.

While the code for the advanced approaches is not readily available, replicating FabSoften or AutoRetouch seems quite feasible. Also, solutions based on generative models appear to be the most promising in terms of quality.

If you're planning to add AI facial beautification to your projects, our team at OpenCV.ai is ready to assist with integration. Check out our Services page for more information. Thank you for reading, and stay tuned for future guides on AI algorithms.