The creation of self-driving cars marks a significant shift in how we think about transportation. Autonomous vehicles rely on an advanced combination of technologies that lets them navigate roads safely and efficiently without human intervention.

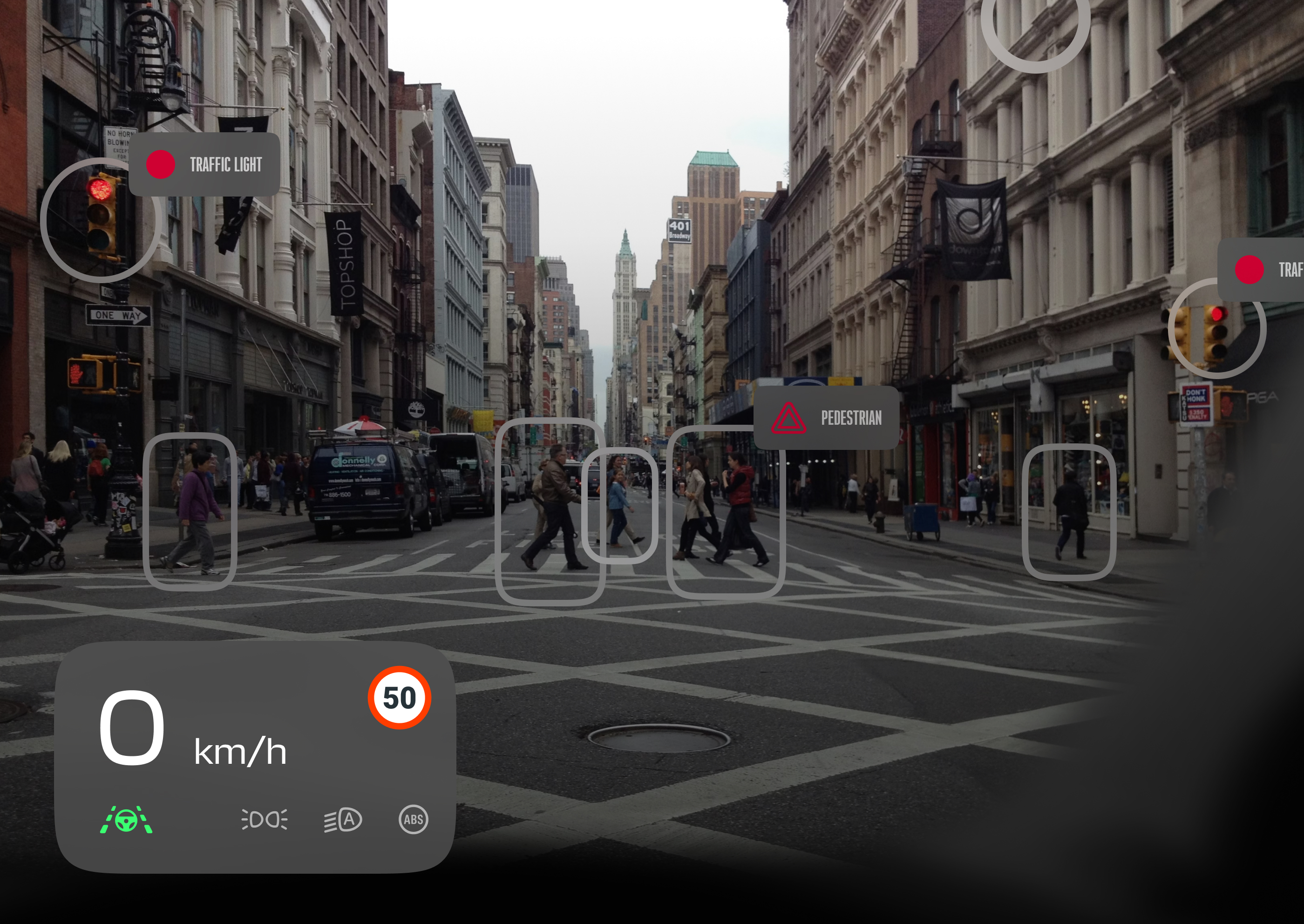

One important part of a self-driving car is computer vision, which helps the car see and understand its surroundings: roads, traffic, pedestrians, and other objects. The car gathers this information with cameras and other sensors, then uses what it sees to make quick decisions and drive safely in different road conditions.

In this article, we'll look at how computer vision works in these cars. We'll cover how the car detects and tracks objects, processes data from a sensor called LIDAR, and analyzes scenes, all of which feed into planning its route.

Self-driving cars use a mix of sensors, cameras, and smart algorithms to move around safely. They need two main things to do this: computer vision and machine learning.

Computer vision acts like the car's eyes. It uses cameras and sensors to capture images and video of everything around the car, including road lines, traffic lights, people, and other cars. The car then applies image-analysis techniques to interpret these images and video.

Machine learning is like the car's brain. It takes the data from the cameras and sensors and uses learning algorithms, such as neural networks, to find patterns, make predictions, and learn from new information. This helps the car make smart decisions, handle new situations, and improve over time.

Object Detection: A big part of making self-driving cars work is teaching them to detect the many objects on the road around them. Using cameras and other sensors, the car distinguishes between elements like other vehicles, pedestrians, road signs, and obstacles, relying on computer vision methods to recognize these objects quickly and accurately in real time.
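
The article doesn't name a specific post-processing step, but many detectors (YOLO included) end with non-maximum suppression (NMS), which removes duplicate boxes around the same object. The sketch below shows the idea under stated assumptions: boxes are `(x1, y1, x2, y2)` pixel tuples, the function names are hypothetical, and the 0.5 overlap threshold is an illustrative choice rather than a value from any particular library.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much, repeat.

    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that mostly cover the same object as `best`.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The first two boxes overlap heavily (IoU of about 0.82), so only the higher-scoring one survives; the third box is a separate object and is kept.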

Object Tracking: After the car detects an object, it needs to keep following it, especially if it's moving. Tracking tells the car where things like other cars and people are heading, which is crucial for path planning and collision avoidance. Computer vision algorithms do this by observing how each object moves over time and estimating where it will be next.
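
The simplest way to "guess where an object will be next" is to assume it keeps its current velocity. This is a minimal sketch under that constant-velocity assumption; the function name and the use of 2-D ground-plane positions in metres are illustrative choices, not the method of any particular tracker.

```python
def predict_position(prev, curr, dt, horizon):
    """Constant-velocity guess of where an object will be `horizon` seconds after `curr`.

    prev, curr: (x, y) positions in metres, observed `dt` seconds apart.
    """
    vx = (curr[0] - prev[0]) / dt  # estimated velocity along x (m/s)
    vy = (curr[1] - prev[1]) / dt  # estimated velocity along y (m/s)
    return (curr[0] + vx * horizon, curr[1] + vy * horizon)


# A pedestrian moved from y = 2.0 m to y = 1.5 m in half a second (1 m/s toward y = 0).
print(predict_position((10.0, 2.0), (10.0, 1.5), dt=0.5, horizon=1.0))  # (10.0, 0.5)
```

Real trackers smooth these estimates over many frames, because velocity computed from just two noisy positions can jump around.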

Analyzing LIDAR Data: LIDAR is a sensor that helps self-driving cars understand their surroundings in 3D. It works like radar but uses laser light instead of radio waves. Because LIDAR supplies its own light, it works regardless of ambient lighting, unlike cameras, although heavy rain, fog, or snow can still degrade its measurements. The car analyzes LIDAR data to build a detailed 3D map of the environment, detecting objects, measuring their distance, and estimating their relative speed, for example by comparing successive scans.
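
One way to get relative speed from LIDAR is to compare an object's range across two scans. The sketch below assumes the same object has already been identified in both scans and that its closing speed stays constant; it then derives a time-to-collision estimate. The function names and numbers are hypothetical.

```python
def closing_speed(range_prev, range_curr, dt):
    """Rate at which the gap to an object shrinks, in m/s (positive means approaching)."""
    return (range_prev - range_curr) / dt


def time_to_collision(range_curr, speed):
    """Seconds until contact if the closing speed stays constant; None if not approaching."""
    if speed <= 0:
        return None
    return range_curr / speed


# Two scans 0.1 s apart: the car ahead went from 30.0 m to 29.0 m away.
v = closing_speed(30.0, 29.0, 0.1)   # about 10 m/s
print(v, time_to_collision(29.0, v))  # about 10.0 and 2.9
```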

Self-driving cars don't just use one tool or sensor to understand what's around them. They use a bunch of them together, like cameras, LIDAR (a light-based radar), regular radar, GPS, and ultrasonic sensors (which use sound to detect objects). This combination of tools helps the car construct a precise picture of its surroundings.

This process is called sensor fusion. It's like putting together pieces of a puzzle. Each tool has its strengths and weaknesses. For example, cameras are great for seeing details, LIDAR is good at measuring how far away things are, and radar can see objects even in bad weather. By combining all the information from these tools, self-driving cars can better understand the world around them. This is important for the car to make safe and smart driving decisions.
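
A classic way to combine several sensors' readings of the same quantity is to weight each one by how much we trust it. The sketch below uses an inverse-variance weighted average, assuming independent errors with known variances; the sensor variances are invented for illustration, and production systems typically fuse data with Kalman-style filters rather than this one-shot formula.

```python
def fuse_estimates(measurements):
    """Inverse-variance weighted average of independent measurements of one quantity.

    measurements: list of (value, variance) pairs, e.g. one distance per sensor.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in measurements]  # more precise sensors count more
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical distance readings (metres) with assumed error variances.
readings = [
    (20.0, 0.01),  # LIDAR: precise range
    (20.5, 0.25),  # radar
    (19.0, 1.0),   # camera-based estimate: least precise
]
print(fuse_estimates(readings))  # about (20.01, 0.0095)
```

The fused estimate sits closest to the LIDAR reading, and its variance is smaller than that of any single sensor. That is the "puzzle pieces" intuition expressed numerically.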

Now that we've seen how self-driving cars combine several sensors for a clearer picture of their surroundings, let's dive into two technologies that play a crucial role: YOLO for detecting objects and Deep SORT for tracking them over time.

What is YOLO: YOLO stands for 'You Only Look Once.' It's a fast object detection model that lets the car spot many kinds of objects, such as cars, people, and street signs, in a single pass over the image. It divides the input image into a grid, and each grid cell simultaneously predicts bounding boxes and class probabilities, so the system detects multiple objects at once.
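
To make the grid idea concrete, here is a sketch modelled on the original YOLO formulation. The grid cell that contains an object's centre is responsible for it, and that cell predicts the box centre as an offset within the cell plus a width and height relative to the whole image. The 448 x 448 input and 7 x 7 grid are assumed values; later YOLO versions use anchor boxes and a different parametrization, and the function names here are hypothetical.

```python
def responsible_cell(cx, cy, img_w, img_h, S):
    """Return (row, col) of the S x S grid cell that contains the box centre (cx, cy)."""
    col = min(int(cx / img_w * S), S - 1)
    row = min(int(cy / img_h * S), S - 1)
    return row, col


def decode_box(row, col, x_off, y_off, w_rel, h_rel, img_w, img_h, S):
    """Turn one cell's prediction back into pixel coordinates (x1, y1, x2, y2).

    x_off, y_off: box-centre offset inside the cell, in [0, 1].
    w_rel, h_rel: box size as a fraction of the whole image.
    """
    cx = (col + x_off) / S * img_w
    cy = (row + y_off) / S * img_h
    w, h = w_rel * img_w, h_rel * img_h
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# A pedestrian centred at pixel (300, 100) in a 448 x 448 image with a 7 x 7 grid.
print(responsible_cell(300, 100, 448, 448, 7))  # (1, 4)
print(decode_box(1, 4, 0.6875, 0.5625, 0.25, 0.5, 448, 448, 7))
```

Because every cell makes its predictions in the same forward pass, the whole image is covered at once rather than scanned region by region.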

Why YOLO is Good for Self-Driving Cars: YOLO runs in real time, which suits self-driving cars that must detect the objects around them quickly. Fast detection helps the vehicle respond in time to changing road conditions.
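
A quick calculation shows why detection speed matters. The car keeps moving while each frame is being processed, so any latency translates directly into distance travelled "blind". The speeds, latencies, and frame rate below are illustrative assumptions, not measured figures for YOLO.

```python
def distance_during_latency(speed_kmh, latency_s):
    """Metres a vehicle travels while one frame is being processed."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then multiply by time


def frame_budget_s(fps):
    """Time available to process each frame at a given camera frame rate."""
    return 1.0 / fps


print(frame_budget_s(30))                # about 0.033 s per frame at 30 fps
print(distance_during_latency(50, 0.1))  # about 1.39 m at 50 km/h with 100 ms latency
```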

What is Deep SORT: Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) builds on the SORT (Simple Online and Realtime Tracking) algorithm by adding a deep-learning appearance descriptor to improve tracking accuracy. Using this appearance information, it maintains each object's identity over time, even through occlusions or when an object briefly disappears from view.
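
The "appearance information" can be pictured as a feature vector per detection, compared against the vectors a track has stored from earlier frames. The sketch below uses the smallest cosine distance to a track's stored features, which reflects how Deep SORT measures appearance similarity. The tiny 3-element vectors are stand-ins (assumed) for the much longer embeddings a CNN would produce, and the function names are hypothetical.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: 0 for identical directions, up to 2 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def appearance_distance(track_gallery, detection_feature):
    """Smallest cosine distance between a new detection and any stored feature of a track."""
    return min(cosine_distance(f, detection_feature) for f in track_gallery)


# Features this track stored in earlier frames (hypothetical values).
gallery = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
print(appearance_distance(gallery, [1.0, 0.0, 0.0]))  # 0.0: looks like the same object
print(appearance_distance(gallery, [0.0, 1.0, 0.0]))  # about 0.89: looks different
```

Keeping several past features per track is what lets an object be re-identified after a short occlusion, even if its most recent appearance was partly hidden.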

Deep SORT in Self-Driving Cars: For self-driving cars, it's important to keep track of how the objects around them move. Deep SORT uses a motion model (a Kalman filter) to predict where each tracked object will be next, which is key for planning where to drive and avoiding crashes.
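
The prediction step can be illustrated with a Kalman filter under a constant-velocity model. Deep SORT's filter tracks box position, aspect ratio, height, and their velocities. This sketch reduces that to one dimension (position and velocity) so the predict/update cycle is easy to follow. The class name and noise values (`q`, `r`, initial variance) are assumptions chosen for illustration.

```python
class ConstantVelocityKalman1D:
    """1-D constant-velocity Kalman filter; state is [position, velocity]."""

    def __init__(self, position, velocity=0.0, var=10.0, q=0.01, r=0.25):
        self.x = [position, velocity]
        self.P = [[var, 0.0], [0.0, var]]  # state covariance (uncertainty)
        self.q = q  # process noise added to each state variance per step (assumed)
        self.r = r  # variance of the position measurement (assumed)

    def predict(self, dt):
        """Move the state forward dt seconds; returns the predicted position."""
        p, v = self.x
        (a, b), (c, d) = self.P
        self.x = [p + v * dt, v]
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.x[0]

    def update(self, z):
        """Correct the state with a measured position z."""
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance
        k0, k1 = a / s, c / s     # Kalman gain for position and velocity
        y = z - self.x[0]         # innovation: measurement minus prediction
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P, with H = [1, 0]
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


# An object moving at 1 m/s, observed every 0.1 s; the filter starts with velocity 0.
kf = ConstantVelocityKalman1D(position=0.0)
for k in range(1, 101):
    kf.predict(0.1)
    kf.update(0.1 * k)
print(kf.x[1])  # close to 1.0 once the filter has converged
```

Even without being told the object's speed, the filter infers it from the sequence of positions and can then predict the next position before the next detection arrives.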

Using Deep SORT: When a self-driving car uses Deep SORT, it takes the objects YOLO detects in each frame and links them into paths over time. This is especially helpful on busy city streets, where pedestrians, cyclists, and cars all move in different directions and at different speeds.
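
Linking detections to existing tracks is an assignment problem. The sketch below matches tracks to detections so that the total box overlap (IoU) is maximised, which is close to the original SORT's matching. Deep SORT additionally weighs appearance similarity and motion-based gating. For simplicity this version brute-forces every pairing, which only works for a handful of objects; real trackers solve the same problem with the Hungarian algorithm. Function names and the 0.3 minimum IoU are hypothetical.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(track_boxes, det_boxes, min_iou=0.3):
    """Match tracks to detections one-to-one, maximising total IoU (brute force).

    Returns (matches, unmatched_tracks, unmatched_dets); matches are (track, det) pairs.
    """
    n_t, n_d = len(track_boxes), len(det_boxes)
    if n_t <= n_d:
        candidates = (list(zip(range(n_t), p)) for p in permutations(range(n_d), n_t))
    else:
        candidates = (list(zip(p, range(n_d))) for p in permutations(range(n_t), n_d))
    best_pairs, best_score = [], -1.0
    for pairs in candidates:
        score = sum(iou(track_boxes[t], det_boxes[d]) for t, d in pairs)
        if score > best_score:
            best_pairs, best_score = pairs, score
    # Reject pairings whose boxes barely overlap: likely different objects.
    matches = [(t, d) for t, d in best_pairs if iou(track_boxes[t], det_boxes[d]) >= min_iou]
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    unmatched_tracks = [t for t in range(n_t) if t not in matched_t]
    unmatched_dets = [d for d in range(n_d) if d not in matched_d]
    return matches, unmatched_tracks, unmatched_dets


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]               # boxes predicted for existing tracks
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
print(associate(tracks, detections))  # ([(0, 1), (1, 0)], [], [2])
```

Unmatched detections (here, index 2) would typically start new tracks, and tracks that stay unmatched for too long would be dropped.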

Having covered how self-driving cars use YOLO to detect objects quickly and Deep SORT to track their movements, let's turn to another key technology: LIDAR, which lets cars understand the world in 3D.

What is LIDAR: LIDAR, which stands for Light Detection and Ranging, helps cars create 3D maps of their surroundings. It works by emitting laser pulses that bounce back after hitting objects. The car measures how long each pulse takes to return and uses that time to calculate the distance to the object. Collecting many such measurements creates a 'point cloud,' a detailed 3D model of the area around the car.
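
The distance calculation is simple physics. The pulse travels to the object and back, so the range is the speed of light times the round-trip time, divided by two. Combining that range with the beam's known angles gives one 3-D point of the point cloud. The axis convention below (x forward, y left, z up) and the function names are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s):
    """Distance to the surface: the pulse goes out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_point(range_m, azimuth_deg, elevation_deg):
    """Convert one return (range, beam angles) to (x, y, z) in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


r = tof_to_range(200e-9)         # a 200 ns round trip
print(r)                         # about 29.98 m
print(to_point(r, 30.0, -2.0))   # the corresponding point, slightly below sensor height
```

A round trip of only 200 nanoseconds already corresponds to about 30 metres, which is why LIDAR needs very precise timing electronics.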

Why LIDAR is Important: LIDAR is good at accurately measuring distances and creating high-resolution maps. This is super important for self-driving cars to understand their surroundings precisely. Another plus is that LIDAR works well in all lighting conditions, making it reliable day and night.

LIDAR as Part of a Bigger System: While LIDAR is powerful, it's usually used alongside cameras and radar. This combination gives the car a more complete and accurate view of its environment, which is crucial for safely navigating complex road situations.

Making Sense of LIDAR Data: Turning LIDAR data into practical information involves complex algorithms. These algorithms filter and interpret the point cloud data, identifying and classifying objects like cars, pedestrians, and road signs. They analyze the shape and movement within the point cloud, which is crucial for detecting and predicting the behavior of various elements on the road.
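
Two common early steps in such a pipeline are removing points that belong to the road surface and grouping the remaining points into clusters, each a candidate object to classify. The sketch below makes strong simplifying assumptions: the ground is a flat plane at a known height, and clustering is a brute-force neighbour search with O(n^2) cost. Real systems typically fit the ground plane (for example with RANSAC) and use spatial indexes such as k-d trees. All names and thresholds are hypothetical.

```python
import math


def remove_ground(points, ground_z=0.0, tolerance=0.2):
    """Drop points within `tolerance` metres of an assumed flat ground plane at ground_z."""
    return [p for p in points if p[2] > ground_z + tolerance]


def euclidean_clusters(points, radius=0.5, min_size=3):
    """Group points connected by chains of neighbours closer than `radius` metres.

    Returns clusters as sorted lists of point indices; tiny clusters are treated as noise.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, cluster = [seed], [seed]
        while queue:  # breadth-style expansion from the seed point
            i = queue.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


cloud = [
    (1.0, 1.0, 0.05), (2.0, 2.0, 0.0), (3.0, 1.0, 0.1),      # road surface
    (5.0, 0.0, 1.0), (5.2, 0.0, 1.0), (5.0, 0.3, 1.2),       # object A
    (10.0, 3.0, 1.0), (10.3, 3.0, 1.0), (10.0, 3.2, 0.8),    # object B
]
objects = remove_ground(cloud)
print(sorted(euclidean_clusters(objects)))  # [[0, 1, 2], [3, 4, 5]]
```

Each resulting cluster can then be measured (size, shape, position) and tracked across scans to estimate how it is moving.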

The Role and Challenges of LIDAR in Self-Driving Cars: LIDAR plays a big part in helping cars 'see' in 3D, which is vital for safe and effective driving decisions. Useful as it is, LIDAR still faces challenges such as cost, sensor size, and processing the large volume of data it collects. These challenges continue to drive advances in this area.

Having looked at how LIDAR helps self-driving cars understand their environment in 3D, we now move to two more sophisticated methods that further enhance the car's understanding of its surroundings: semantic segmentation and depth estimation.

Semantic segmentation is a key part of how self-driving cars process images. It involves dividing a digital picture into different parts or groups of pixels. Each pixel in a segmented image is assigned a label, allowing the vehicle's system to interpret what it 'sees' in terms of objects like roads, pedestrians, vehicles, and traffic signs. This helps the car get a detailed and organized view of its environment.
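
In practice, a segmentation network outputs a score for every class at every pixel, and each pixel's label is the class with the highest score. The sketch below shows only that final labelling step. The class list and the tiny 2 x 2 score grid are hypothetical, and the network that would produce the scores is not shown.

```python
CLASSES = ["road", "pedestrian", "vehicle", "traffic_sign"]  # hypothetical label set


def segment(scores):
    """Per-pixel label map from class scores.

    scores[row][col] is a list with one score per entry in CLASSES.
    """
    return [
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]  # argmax per pixel
        for row in scores
    ]


scores = [
    [[0.90, 0.05, 0.03, 0.02], [0.10, 0.80, 0.05, 0.05]],
    [[0.20, 0.10, 0.60, 0.10], [0.70, 0.10, 0.10, 0.10]],
]
print(segment(scores))  # [['road', 'pedestrian'], ['vehicle', 'road']]
```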

Depth estimation adds another important piece of information: how far things are from the car. It's about figuring out the distance of each point in an image from the car's camera, which is key for understanding the 3D structure of everything around the car. There are different ways to do this, such as using two cameras to mimic how human eyes perceive depth (stereo vision), or using time-of-flight sensors that measure how long emitted light takes to bounce back from objects.
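
For the two-camera approach, depth follows from triangulation. In a rectified stereo pair, a point appears shifted horizontally between the left and right images by a disparity of d pixels, and its depth is Z = f * B / d, where f is the focal length in pixels and B is the distance between the cameras. The focal length and baseline below are assumed example values, and the function name is hypothetical.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d (metres) for a rectified stereo pair.

    disparity_px: horizontal pixel shift of the same point between the two images.
    focal_px: focal length in pixels; baseline_m: distance between the cameras.
    """
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Assumed rig: 700-pixel focal length, cameras 0.54 m apart.
for d in (40, 20, 2, 1):
    print(d, stereo_depth(d, 700, 0.54))  # about 9.45, 18.9, 189, 378 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error barely matters for nearby objects but matters a lot far away: going from 2 to 1 pixel of disparity doubles the estimate from about 189 m to 378 m. This is one reason cars combine stereo cameras with LIDAR or radar.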

Together, semantic segmentation and depth estimation give self-driving cars a detailed and complete understanding of their surroundings. They help the car accurately detect objects, measure distances, and assess risks, which are crucial for safe driving and making good decisions on the road. These methods show how advanced self-driving car technology is becoming, moving towards a future where cars can navigate with an almost human-like understanding of the world around them.
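
Here is a small illustration of how the two outputs complement each other. The segmentation map says *what* each pixel is, and the depth map says *how far* it is, so together they can answer questions like "how close is the nearest pedestrian?" This sketch assumes both maps are pixel-aligned 2-D grids of the same size. Its function name and data are hypothetical, and a real system would also filter out noisy pixels rather than trust a single minimum.

```python
def nearest_distance(labels, depth, target):
    """Smallest depth among pixels labelled `target`; None if the class is absent.

    labels: 2-D grid of class names (segmentation); depth: same-sized 2-D grid in metres.
    """
    hits = [
        d
        for label_row, depth_row in zip(labels, depth)
        for label, d in zip(label_row, depth_row)
        if label == target
    ]
    return min(hits) if hits else None


labels = [["road", "pedestrian"], ["pedestrian", "vehicle"]]
depth = [[5.0, 12.0], [11.5, 30.0]]
print(nearest_distance(labels, depth, "pedestrian"))  # 11.5
print(nearest_distance(labels, depth, "cyclist"))     # None
```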

As we look ahead, self-driving cars are set to get even smarter and more capable. Here are some of the big trends and challenges:

Smarter Cars with Better AI: As artificial intelligence (AI) improves, self-driving cars will make better decisions. This goes hand in hand with improvements in sensors like LIDAR and radar, which help cars 'see' better in different conditions.

Smart City Integration: Imagine cars that can talk to each other and the city's traffic systems. This could lead to smoother traffic, fewer jams, and safer roads. It's all about connecting cars to the smart city infrastructure.

Safety First: A big challenge is making sure these cars are safe. They must work well in all situations, even ones they haven't encountered before. Designing fail-safe ways for cars to handle surprises is complex but necessary.

Reliability: These cars need to work well all the time, no matter where they are or what the weather is like. They also have to deal with unpredictable things people might do on the road. Being reliable like this is key for everyone to trust and accept them.

Winning Public Trust: Getting people to trust self-driving cars is not just about showing they're safe and reliable. It's also about addressing worries like job losses, privacy, and security. Teaching the public about the benefits and limits of these cars and being open about how they work will help build this trust.

In conclusion, while the journey of self-driving cars is full of exciting advancements, it also comes with significant challenges that need careful attention. Overcoming these hurdles is crucial for these cars to become a common sight on our roads, changing how we travel for the better.